When I plan to visit a new city, say Pittsburgh, for a conference, I generally do not plan in terms of the number of footsteps I will walk or the number of rotations the wheels of my taxi will make. I plan in high-level terms like “take a taxi to the airport”, “go through TSA inspection”, “fly to Pittsburgh”, etc. Thinking in such high-level terms has obvious benefits: it makes plans easy to communicate and, most importantly, it makes planning for longer sequences of events possible.

The same argument applies to robot planning. In robotics, planning refers to creating a sequence of states to be visited in order to reach a particular goal, where “states” generally refers to Cartesian coordinates or robot arm joint angles. Planning over low-level states like these lets the robot plan fine motions that require a high degree of precision, but the fineness of these states makes it difficult, if not impossible, to plan for longer-horizon tasks; it is very much like planning in “footstep” space. A typical example of a long-horizon task is cleaning up a kitchen. Forcing a robot to plan in Cartesian space to clean up a dirty kitchen would simply not work. The robot would have to reason not only about its own states, but also about the states of all the objects it has to interact with, the relationships between those objects, and how to decide from these relationships whether or not the kitchen has been successfully cleaned up. All in Cartesian space! Needless to say, this task would be computationally intractable.

For robots to plan for longer-horizon tasks, they need a higher-level representation of the domain over which they can plan (state abstraction). Alternatively, as with my journey to Pittsburgh, they need high-level actions or skills with which they can plan (action abstraction). Should these skills be chosen for the robot beforehand, or should the robot generate its own set of skills? A good argument can be made for both options. Manually choosing the robot’s skill set is a way to ensure that the robot only executes safe actions. However, depending on how domain-specific this set of skills is, you would probably have to manually create different sets of skills for different domains. On the other hand, if the robot “discovers” its own skills, it can function in varying domains with little manual engineering, although more work might be needed to constrain the robot’s skill generation to a safe space of skills. In this post, I focus on Skill Chaining, a method for robots to generate their own set of skills to achieve a goal.

Skill Chaining

A skill is made up of three components: an initialization set, a policy and a termination condition. The initialization set (called the initiation set in the options literature) consists of the states from which the skill can be executed. The policy is a mapping from states to actions; it dictates the skill’s action in each state. The termination condition gives, for each state where it is defined, the probability that the skill’s execution terminates there.
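To make the three components concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the 1-D state, the `Skill` class, the `move_right` example and the toy `run` helper are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass
from typing import Callable

State = float   # assumption: a 1-D state, purely for illustration
Action = float  # assumption: a 1-D action that is added to the state


@dataclass
class Skill:
    init_set: Callable[[State], bool]      # True if the skill may start in this state
    policy: Callable[[State], Action]      # action to take in each state
    termination: Callable[[State], float]  # probability of terminating in this state


# Hypothetical skill: "move right until x >= 1.0", startable from x in [0, 1).
move_right = Skill(
    init_set=lambda x: 0.0 <= x < 1.0,
    policy=lambda x: 0.1,
    termination=lambda x: 1.0 if x >= 1.0 else 0.0,
)


def run(skill: Skill, x: State, max_steps: int = 100) -> State:
    """Execute a skill in a toy world where each action is added to the state."""
    if not skill.init_set(x):
        return x  # the skill cannot be started from this state
    for _ in range(max_steps):
        if skill.termination(x) >= 1.0:  # termination is deterministic in this sketch
            break
        x += skill.policy(x)
    return x
```

Calling `run(move_right, 0.5)` steps the state to the right until the termination condition fires, while `run(move_right, 2.0)` returns the state unchanged because 2.0 lies outside the initialization set.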

A natural question at this point should be: how is a skill even created?

Given an episodic task defined over a state space S with reward function R and goal trigger function T, our aim is to create a skill, s, that triggers T. We use T as the termination condition of the skill. The policy of the skill is learned using regular reinforcement learning, with reward R plus an additional reward for triggering T. To choose the initialization set, we train a classifier on positive examples (states from which the skill was executed and triggered T) and negative examples (states from which the skill was executed and did not trigger T). The states that the classifier labels as positive make up the initialization set. And there it is, your well-defined skill.
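As a rough sketch of the classifier step, the snippet below labels a state as inside or outside the initialization set using a 1-nearest-neighbour rule. The 1-NN rule is a stand-in chosen for brevity, not necessarily the classifier used in the paper, and the example states and function names are hypothetical.

```python
import math


def nearest_label(state, examples):
    """Return the label of the closest labelled example (1-nearest-neighbour)."""
    closest = min(examples, key=lambda ex: math.dist(state, ex[0]))
    return closest[1]


# Hypothetical 2-D states from which the skill was executed.
# True: the execution triggered T. False: it did not.
examples = [
    ((0.9, 0.9), True),
    ((0.8, 0.7), True),
    ((0.1, 0.2), False),
    ((0.2, 0.1), False),
]


def in_initialization_set(state):
    """A state belongs to the initialization set if the classifier says so."""
    return nearest_label(state, examples)
```

With these examples, a state near the positive cluster such as `(0.85, 0.8)` is classified as inside the initialization set, and a state near the negative cluster such as `(0.0, 0.0)` is classified as outside it.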

The skill chaining algorithm works as follows:

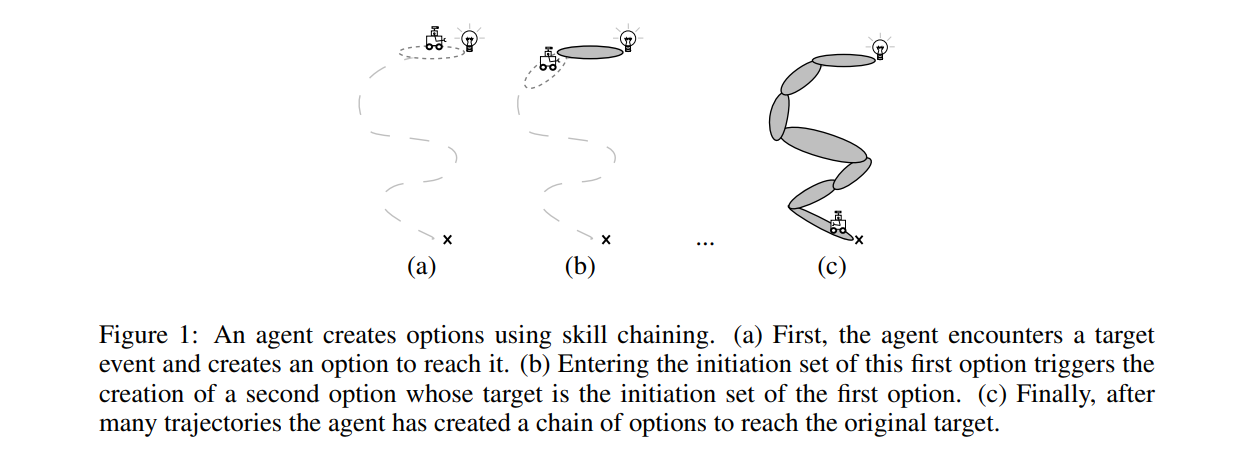

- Given an initial target event with trigger function T_0, first create a skill to trigger T_0, and learn a good policy PI_0 and a good initialization set I_0 for this skill.

- Next, add the event T_1 = I_0 to the list of target events and create a skill to trigger T_1.

- Repeating this procedure results in a chain of skills leading from any state the agent may start in to the region of the task goal.
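The steps above can be sketched on a toy problem. In the snippet below, states are integers on a line and the goal is state 10. The helper `make_skill` is a hypothetical stand-in for the learning step: instead of learning a policy and an initialization set, it hard-codes the assumption that a skill can reach its target from at most `reach` cells to the left. Only the chaining loop itself mirrors the procedure described above.

```python
def make_skill(target, reach=3):
    """Hypothetical skill that triggers `target` from up to `reach` cells to its left.

    `target` is a predicate over integer states. In real skill chaining the
    policy and initialization set are learned; here they are hard-coded.
    """
    def init_set(s):
        return (not target(s)) and any(target(s + k) for k in range(1, reach + 1))

    return {"init_set": init_set, "target": target}


def skill_chain(goal, start, max_skills=20):
    """Create skills backwards from the goal until one can start at `start`."""
    chain = []
    target = goal                      # T_0
    for _ in range(max_skills):
        skill = make_skill(target)
        chain.append(skill)
        if skill["init_set"](start):   # the chain now covers the start state
            break
        target = skill["init_set"]     # T_{i+1} = I_i
    return chain


chain = skill_chain(goal=lambda s: s == 10, start=0)
```

Here the first skill can start from states 7 to 9, the second from 4 to 6, and so on, so the chain grows backwards from the goal until a skill can be started from state 0.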

This is best illustrated in the figure below, which is from the seminal paper on skill chaining by George Konidaris and Andrew Barto:

It is worth noting that, even though the skills are discovered in a chain, they don’t have to be used in that sequence.

Thoughts and Questions about Skill Chaining

On reading the paper in detail, the first thing that struck me was the function approximator they used for learning the skill policy. They used linear function approximation with 4th- and 5th-order Fourier bases. The paper was written in 2009, so using Deep Neural Networks as the go-to function approximator was not exactly cool yet. If skill chaining were implemented with Deep Neural Networks as the function approximator for learning the skill policy, would the flexibility of DNNs make the learned skills better? How much better, and in what ways? Would the process of learning skills speed up or slow down? Would DNNs make it easier to perform more rigorous experimental validation in domains more complex than the Pinball domain used in the original paper?
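For readers unfamiliar with the Fourier basis, here is a minimal sketch of the standard construction, assuming each state dimension has been scaled to [0, 1]. The function names and the example state are my own, and this is not the paper’s code.

```python
import itertools
import math


def fourier_features(state, order):
    """Fourier basis features: cos(pi * c . x) for every c in {0..order}^d."""
    coeffs = itertools.product(range(order + 1), repeat=len(state))
    return [
        math.cos(math.pi * sum(c * x for c, x in zip(cs, state)))
        for cs in coeffs
    ]


def linear_value(weights, features):
    """Linear function approximation: a weighted sum of the features."""
    return sum(w * f for w, f in zip(weights, features))


# Hypothetical 2-D state with a 4th-order basis: (4 + 1) ** 2 = 25 features.
phi = fourier_features((0.25, 0.5), order=4)
```

The number of features is (order + 1) raised to the number of state dimensions, so a 4-dimensional state with a 4th-order basis already has 625 features. This growth is one reason the question of swapping in a different function approximator is interesting.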

I also don’t quite understand what Figure 5 and Figure 8 are trying to depict with portions of the Pinball domain engulfed in shadows. [My guess is that the illuminated regions indicate the initiation set of the skill.]

Does skill chaining scale well to higher-dimensional use cases like discovering skills on physical robots? Is there a follow-up paper that demonstrates this? (I couldn’t find one.)

When performing skill chaining with a physical robot, what measures can be put in place to ensure that the RL policy-learning procedure is safe for both the robot and the humans in its vicinity? Could the policy be learned through means other than RL? Could this be made possible by re-framing the policy learning problem?

What is the quality of the skills discovered by the robot? Are they generic enough to be used for different tasks in different domains or would the robot have to learn a new skill for each domain and each task? In what ways should the algorithm be improved in order to encourage it to learn generalizable skills?

The Skill-chaining paper can be found here