- Kullback-Leibler divergence (KL divergence) has its origins in information theory.

- It measures how much information is lost when one probability distribution is used to approximate another.

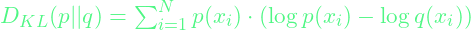

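- For discrete distributions, KL divergence is expressed mathematically as (the sum becomes an integral for continuous distributions)

  $$D_{KL}(p \parallel q) = \sum_{x} p(x) \log \frac{p(x)}{q(x)}$$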

- where p(x) is the true probability distribution and q(x) is the approximate probability distribution.
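The sum above can be computed directly for discrete distributions. The sketch below is a minimal illustration, assuming `p` and `q` are plain Python lists of probabilities over the same outcomes and using the natural log (so the result is in nats); the function name `kl_divergence` is hypothetical. It follows the usual conventions that terms with $p(x) = 0$ contribute nothing and that the divergence is infinite if $q(x) = 0$ where $p(x) > 0$.

```python
import math

def kl_divergence(p, q):
    """Discrete D_KL(p || q) in nats (hypothetical helper for illustration).

    p: true distribution, q: approximate distribution, both lists of
    probabilities over the same outcomes.
    """
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue          # 0 * log(0 / q) is taken to be 0
        if qx == 0:
            return math.inf   # q assigns zero mass where p does not
        total += px * math.log(px / qx)
    return total

p = [0.5, 0.5]   # "true" distribution
q = [0.9, 0.1]   # approximation
print(kl_divergence(p, q))
```

For these inputs the result is $\tfrac{1}{2}\ln\tfrac{25}{9} \approx 0.511$ nats, and `kl_divergence(p, p)` is 0.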

- Equivalently, KL divergence is the expectation, taken under the true distribution p, of the difference between the log probabilities $\log p(x)$ and $\log q(x)$.

- This can succinctly be expressed as:

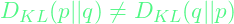
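Because KL divergence is an expectation under p, it can also be estimated by sampling from p and averaging $\log p(x) - \log q(x)$. The following is a minimal sketch assuming small discrete distributions given as Python lists with $q(x) > 0$ wherever $p(x) > 0$; the names `kl_exact` and `kl_monte_carlo`, the sample size, and the seed are illustrative choices, not part of the original notes.

```python
import math
import random

def kl_exact(p, q):
    # Direct sum over outcomes; assumes q(x) > 0 wherever p(x) > 0.
    return sum(px * (math.log(px) - math.log(qx)) for px, qx in zip(p, q) if px > 0)

def kl_monte_carlo(p, q, n_samples=100_000, seed=0):
    # Draw outcome indices x ~ p, then average the log difference log p(x) - log q(x).
    rng = random.Random(seed)
    samples = rng.choices(range(len(p)), weights=p, k=n_samples)
    return sum(math.log(p[x]) - math.log(q[x]) for x in samples) / n_samples

p = [0.1, 0.4, 0.5]
q = [0.3, 0.3, 0.4]
print(kl_exact(p, q), kl_monte_carlo(p, q))
```

The sampled estimate approaches the exact sum as `n_samples` grows. This sampling view is what makes KL-based objectives usable when summing over every outcome is impractical.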

- It is important to note that KL divergence is a measure of divergence, not a distance: it is not symmetric. As such, $D_{KL}(p \parallel q) \neq D_{KL}(q \parallel p)$ in general.
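The asymmetry is easy to see numerically by swapping the arguments. This is a small sketch with a hypothetical `kl_divergence` helper over discrete distributions, assuming both distributions are strictly positive on every outcome so no zero-probability handling is needed.

```python
import math

def kl_divergence(p, q):
    # Discrete D_KL(p || q) in nats; assumes all entries of p and q are > 0.
    return sum(px * math.log(px / qx) for px, qx in zip(p, q))

p = [0.5, 0.5]
q = [0.9, 0.1]
forward = kl_divergence(p, q)   # D_KL(p || q)
reverse = kl_divergence(q, p)   # D_KL(q || p)
print(forward, reverse)
```

Here the two directions come out to roughly 0.511 and 0.368 nats, so which distribution is treated as the reference matters.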

- A prominent application of KL divergence is in Bayesian approaches such as Variational Inference and Variational Autoencoders. In such problems, deriving the true posterior analytically is intractable, so the aim is to choose an approximate distribution (usually a Gaussian) and use gradient-based methods to reduce the KL divergence between the approximate posterior and the true posterior, updating the parameters of the approximate posterior on each backward pass. Because this KL term itself depends on the intractable posterior, in practice it is minimized indirectly by maximizing the evidence lower bound (ELBO).
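To illustrate the gradient-based idea in isolation, the sketch below fits a Gaussian $q = \mathcal{N}(\mu_q, \sigma_q^2)$ to a target Gaussian $p = \mathcal{N}(\mu_p, \sigma_p^2)$ by plain gradient descent on the closed-form $D_{KL}(q \parallel p)$. This is a toy assumption: unlike real variational inference, the target here is fully known, so the KL can be computed exactly and no ELBO is needed. The helper names, learning rate, step count, and target parameters are all hypothetical, and $\sigma_q$ is parameterized through its log to keep it positive.

```python
import math

def kl_gaussians(mu_q, sigma_q, mu_p, sigma_p):
    # Closed-form D_KL(N(mu_q, sigma_q^2) || N(mu_p, sigma_p^2)) in nats.
    return (math.log(sigma_p / sigma_q)
            + (sigma_q**2 + (mu_q - mu_p)**2) / (2 * sigma_p**2)
            - 0.5)

def fit_gaussian(mu_p, sigma_p, lr=0.1, steps=2000):
    # Start q at a standard normal; optimize mu_q and log(sigma_q).
    mu_q, log_sigma_q = 0.0, 0.0
    for _ in range(steps):
        sigma_q = math.exp(log_sigma_q)
        # Analytic gradients of the KL above w.r.t. mu_q and log(sigma_q).
        grad_mu = (mu_q - mu_p) / sigma_p**2
        grad_log_sigma = sigma_q**2 / sigma_p**2 - 1.0
        mu_q -= lr * grad_mu
        log_sigma_q -= lr * grad_log_sigma
    return mu_q, math.exp(log_sigma_q)

mu_q, sigma_q = fit_gaussian(mu_p=3.0, sigma_p=2.0)
print(mu_q, sigma_q, kl_gaussians(mu_q, sigma_q, 3.0, 2.0))
```

After the updates, $q$ converges to the target ($\mu_q \approx 3$, $\sigma_q \approx 2$) and the KL divergence shrinks toward zero, mirroring on a small scale how each backward pass nudges the approximate distribution's parameters.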

References: Wikipedia, Blog Post by Count Bayesie